Wd asked: AI large models, parameters

AI answer: You're referring to the parameters of large AI models!

In the context of artificial intelligence (AI), a large model typically refers to a deep learning model with a massive number of parameters, ranging from hundreds of millions into the trillions. These parameters are the model's internal variables: they are learned during training and then used to make predictions or generate outputs.

Here are some key aspects of large AI models and their parameters:

What are model parameters?

Model parameters are the internal variables of a machine learning model that are learned from the training data. They are the numbers the model uses to turn an input into a prediction or output. In a neural network, the parameters are mainly the weights of the connections between neurons and the bias of each neuron.
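As a rough illustration of "weights and biases", the sketch below counts the parameters of a plain fully connected network. It assumes every neuron in one layer connects to every neuron in the next (one weight per connection) and that each non-input neuron has one bias. The function names and the 784-128-10 layer sizes are hypothetical, chosen only for the example.

```python
def dense_layer_params(n_in: int, n_out: int) -> int:
    """Parameters in one fully connected layer: one weight per connection plus one bias per output neuron."""
    weights = n_in * n_out
    biases = n_out
    return weights + biases


def mlp_params(layer_sizes: list[int]) -> int:
    """Total parameters of a multilayer perceptron given its layer widths, e.g. [784, 128, 10]."""
    return sum(
        dense_layer_params(n_in, n_out)
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


if __name__ == "__main__":
    # Hypothetical network: 784 inputs, one hidden layer of 128 neurons, 10 outputs.
    print(mlp_params([784, 128, 10]))  # (784*128 + 128) + (128*10 + 10) = 101770
```

Even this small network has about a hundred thousand parameters; the count grows with the product of neighbouring layer widths, which is why wide, deep models reach millions or billions.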

How many parameters do large AI models have?

The number of parameters in large AI models can be enormous. For example:

* BERT (Bidirectional Encoder Representations from Transformers), a popular language model, has around 340 million parameters in its BERT-Large version; the smaller BERT-Base has about 110 million.

* RoBERTa (Robustly Optimized BERT Pretraining Approach), a variant of BERT, has around 355 million parameters in its large version.

* Meta's LLaMA, a family of language models rather than a chatbot, was first released in sizes from about 7 billion to 65 billion parameters.

* Among the largest published transformer models, Google's Switch Transformer, a sparse mixture-of-experts model, reaches about 1.6 trillion parameters!
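Figures like BERT's can be roughly reproduced from the architecture. The sketch below counts the parameters of a BERT-style encoder, assuming the published BERT-Large configuration (24 layers, hidden size 1,024, feed-forward size 4,096, a 30,522-token vocabulary, 512 positions, 2 segment types). It counts the embeddings, encoder layers, and pooler, and leaves out the pretraining output heads; the function name `bert_params` is hypothetical.

```python
def bert_params(vocab: int, max_pos: int, type_vocab: int,
                hidden: int, ffn: int, layers: int) -> int:
    """Approximate parameter count of a BERT-style encoder (embeddings, encoder layers, pooler)."""
    # Token, position, and segment embedding tables, plus one LayerNorm (scale + shift vectors).
    embeddings = (vocab + max_pos + type_vocab) * hidden + 2 * hidden
    # Self-attention: query, key, value, and output projections, each hidden x hidden plus a bias.
    attention = 4 * (hidden * hidden + hidden)
    # Feed-forward block: hidden -> ffn -> hidden, each projection with a bias.
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden)
    # Two LayerNorms per layer, each with a scale and a shift vector.
    layer_norms = 2 * (2 * hidden)
    per_layer = attention + feed_forward + layer_norms
    # Pooler: one dense hidden x hidden layer applied to the first token's output.
    pooler = hidden * hidden + hidden
    return embeddings + layers * per_layer + pooler


if __name__ == "__main__":
    total = bert_params(vocab=30522, max_pos=512, type_vocab=2,
                        hidden=1024, ffn=4096, layers=24)
    print(f"{total:,}")  # 335,141,888
```

The result, about 335 million, is the figure usually rounded to "340 million"; with BERT-Base settings (hidden size 768, feed-forward size 3,072, 12 layers) the same function gives about 109 million. Most of the total sits in the repeated encoder layers, so depth and hidden size dominate the count.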

Types of parameters in large AI models

Large AI models typically have several types of parameters, including:

1. Weights: These are the primary parameters of a neural network. Each weight scales one input to a neuron, and the weighted inputs are summed to compute the neuron's output.

2. Biases: These are additional parameters added to the weighted sum of a neuron's inputs, letting the neuron shift its output.

3. Embeddings: These are learned vector representations of input data, such as words or tokens, that the model uses as its inputs.
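To show where each parameter type is used, here is a toy forward pass in plain Python: a token is looked up in an embedding table, then each output neuron computes a weighted sum plus a bias. The vocabulary, sizes, and function names are hypothetical, and the values are random rather than trained; in a real model, training would adjust all three kinds of parameters.

```python
import random

# Hypothetical toy sizes chosen only for illustration.
VOCAB = ["the", "cat", "sat"]
EMBED_DIM = 4
N_OUT = 2

rng = random.Random(0)  # fixed seed so the example is reproducible

# Embeddings: one learned vector per token in the vocabulary.
embeddings = {tok: [rng.uniform(-1, 1) for _ in range(EMBED_DIM)] for tok in VOCAB}
# Weights: one value per connection from an input feature to an output neuron.
weights = [[rng.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in range(N_OUT)]
# Biases: one value per output neuron, added after the weighted sum.
biases = [0.1] * N_OUT


def forward(token: str) -> list[float]:
    """Look up the token's embedding, then compute each neuron's weighted sum plus bias."""
    x = embeddings[token]
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def count_params() -> dict[str, int]:
    """Tally the parameters of each type actually stored in the toy model."""
    return {
        "embeddings": sum(len(vec) for vec in embeddings.values()),
        "weights": sum(len(row) for row in weights),
        "biases": len(biases),
    }


if __name__ == "__main__":
    print(forward("cat"))   # two output values, one per neuron
    print(count_params())   # {'embeddings': 12, 'weights': 8, 'biases': 2}
```

In language models the embedding table alone can be large, since it has one vector per vocabulary entry: vocabulary size times embedding dimension.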

Challenges with large models

While having more parameters can allow a model to learn more complex patterns and relationships in data, it also presents several challenges:

1. Overfitting: Large models can overfit the training data, especially when the data set is small relative to the model. They become too specialized to the training data and perform poorly on unseen data.

2. Computational cost: Training and deploying large models is computationally expensive and requires significant hardware resources.

3. Memory usage: Large models need a lot of memory to store their parameters and to process inputs.
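The memory and compute costs can be estimated with simple arithmetic. This sketch assumes 4 bytes per parameter at 32-bit precision (2 at 16-bit, 1 at 8-bit) and counts only the weights themselves. For training compute it uses the common rule of thumb of about 6 floating-point operations per parameter per training token for a dense transformer, which is an approximation rather than an exact count. The function names and the token count in the example are hypothetical.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(n_params: float, dtype: str = "fp32") -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes).

    Training needs considerably more: gradients, optimizer state, and
    activations are not counted here.
    """
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training compute for a dense transformer:
    about 6 floating-point operations per parameter per training token."""
    return 6 * n_params * n_tokens


if __name__ == "__main__":
    print(weight_memory_gb(1.6e12, "fp32"))  # 6400.0 GB for 1.6 trillion parameters
    print(weight_memory_gb(1.6e12, "int8"))  # 1600.0 GB at 8-bit precision
    # Hypothetical: a 340-million-parameter model trained on 10 billion tokens.
    print(f"{training_flops(340e6, 10e9):.2e}")  # 2.04e+19
```

Storing 1.6 trillion 32-bit parameters would take thousands of gigabytes, far more than a single accelerator holds, which is why such models are split across many devices.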

Parameter reduction techniques

To mitigate these challenges, researchers have developed several techniques to shrink large models, by reducing either the number of parameters or the memory each parameter takes, such as:

1. Pruning: removing redundant or unnecessary parameters from the model.

2. Quantization: reducing the numeric precision of model parameters (for example, from 32-bit floats to 8-bit integers) to reduce memory usage.

3. Knowledge distillation: training a smaller "student" model to reproduce the outputs of a large "teacher" model.
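The three techniques can each be sketched in a few lines of plain Python. This is a minimal illustration, not any particular library's implementation: pruning here is unstructured magnitude pruning (zero the smallest-magnitude weights), quantization is symmetric 8-bit with one shared scale, and the distillation loss is the KL divergence between temperature-softened teacher and student outputs, scaled by the temperature squared (a common convention). All function names are hypothetical.

```python
import math


def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the given fraction of weights with the smallest absolute values."""
    k = int(len(weights) * sparsity)  # how many weights to remove
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the 8-bit integers."""
    return [v * scale for v in q]


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Numerically stable softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened outputs, scaled by temperature**2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


if __name__ == "__main__":
    w = [0.5, -0.05, 0.2, -1.0]
    print(magnitude_prune(w, 0.5))  # [0.5, 0.0, 0.0, -1.0]
    q, scale = quantize_int8(w)
    print(q, dequantize(q, scale))
    print(distillation_loss([3.0, 2.0, 1.0], [1.0, 2.0, 3.0]))
```

In practice, pruning and quantization are usually followed by some fine-tuning to recover accuracy, and the distillation loss is typically combined with the ordinary loss on the true labels.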

These techniques aim to reduce the computational cost and memory usage of large models while preserving their performance.

Do you have any specific questions about large AI models or their parameters?



Asked at: 2025-04-21 16:47:14

热门提问:

ai搜索软件下载

ai智能语音聊天

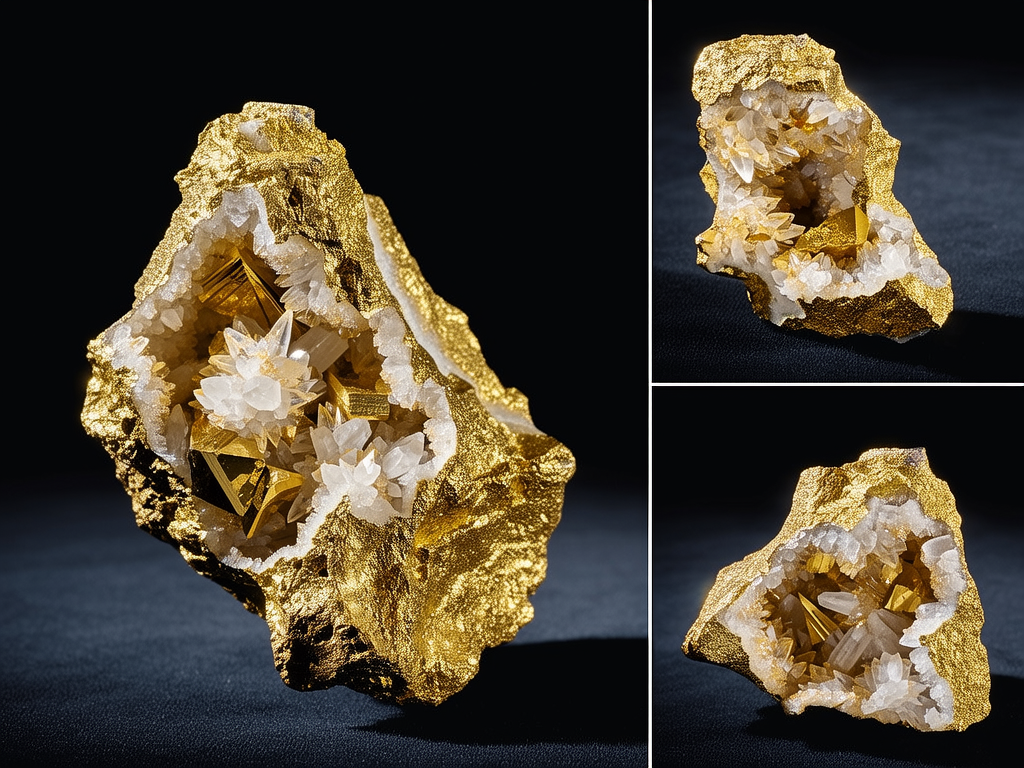

成都市那里有收购黄金

卖黄金平台

.dvag域名

ai绘画2下载

黄金期货交易时间

今日的国际黄金价格

中国中药

中国管业

豌豆Ai站群搜索引擎系统

关于我们:

三乐Ai

作文批改

英语分析

在线翻译

拍照识图

Ai提问

英语培训

本站流量

联系我们

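Note: All questions and answers on this site are generated automatically by AI and are for reference only. If you find an error, please contact us and we will correct or delete it manually.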
